No AI in Agents

LLMs and Agents are orthogonal concepts

Depending on your current level of expertise with AI, the above statement may sound either profoundly controversial or obviously banal. My own interest in the field of AI has followed a more or less inverse relation with the hype and popularity around it. Now, as the AI bubble appears to be in the final stages of its cycle (or perhaps not), my interest in it has intensified, with a particular focus on cutting through the hype and understanding the core concepts underlying it.

But what is an agent?

To make sense of my statement, we first need a clear definition of what an “agent” is. The term was left purposefully vague and ambiguous during the initial months when companies began marketing “AI agents” and “agentic AI” (btw, those two terms are not interchangeable, but that’s a story for another day). As with anything else, giving something a clear label takes away some of its power over you; if people knew what an “agent” actually means, they wouldn’t be hyping it up so much.

In this post, I aim to develop a definition of agents from the ground up in a natural manner. Along the way, we will also see some of the history of agents (yes, it is actually a really old field) and why “AI agents” have become so popular recently.

An Initial Attempt

As is common in the English language, the word “agent” is overloaded with meanings. Not every meaning is relevant to this discussion (no undercover agents here!), but the most useful starting point I found is the way the Cambridge Dictionary defines it:

agent: noun

a person or thing that produces a particular effect or change

This definition gets to the heart of the kind of agents that we are after today: more than anything else, they are agents of change. They do something that produces a side effect or result in the real world.

When we refer to agents in the context of computers, we typically mean software automations that can perform specific tasks and generate results. Thus, we can start with an initial definition for a software agent as follows:

agent

an automation that can perform a certain task and bring about a change in the state of a computer system

Although it looks good, those who have done some functional programming will realise that this definition is too broad to be useful: any program performs a task (no matter how trivial) and brings about a change in the system (even I/O is a change of state). By this logic, even your plain old ls command is an agent!

Clearly, we need something more than this. Let’s look at some examples to build a more useful definition. From my experience, it is more helpful to look at examples that are not agents, so we will keep narrowing our definition until it excludes things we don’t see as agents and only includes the good stuff.

Goal-based Repetition

A simple marker to distinguish agents and non-agents is repetition: an agent is capable of repeating the same actions, while a non-agent will execute once and then be done with it.

The biggest difference between a simple automation and an agent is that an agent has a goal, and it keeps working until that goal is achieved. We can update our definition to be:

agent

an automation that can perform certain tasks as many times as needed to achieve a certain goal and bring about a change in the state of a computer system

Simple programs, like Linux commands, are excluded by this definition. They are incapable of repetition and don’t have any goals as such. Even programs that repeat, like cron jobs, are not agents as they don’t have goals. Their procedure is well-defined and hard-coded.
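To make the contrast concrete, here is a minimal Haskell sketch of goal-based repetition. The names `runOnce`, `runUntil`, `processBatch`, and the step budget are my own assumptions for illustration, not a standard API. The only thing it tries to show is the difference between "run once and stop" and "keep going until the goal holds".

```haskell
-- Hypothetical sketch: all names and the step budget are illustrative assumptions.

-- A one-shot automation: apply the action once and stop.
runOnce :: (s -> s) -> s -> s
runOnce act = act

-- A goal-driven automation: keep applying the action until the goal
-- predicate holds, or until the step budget runs out.
-- Returns the final state and the number of steps taken.
runUntil :: Int -> (s -> Bool) -> (s -> s) -> s -> (s, Int)
runUntil budget goal act = go 0
  where
    go n s
      | goal s || n >= budget = (s, n)
      | otherwise             = go (n + 1) (act s)

-- Toy example: the "system state" is a count of unprocessed files,
-- and each action processes at most three of them.
processBatch :: Int -> Int
processBatch pending = max 0 (pending - 3)
```

With ten pending files, `runOnce processBatch 10` leaves seven behind, while `runUntil 100 (== 0) processBatch 10` keeps going until nothing is left. The budget is there only so that an unreachable goal does not turn into an infinite loop.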

What about SQL queries? They state what is to be obtained and leave the details of obtaining it to the underlying query optimizer and execution engine. Or take some programs written in Haskell; they can be very declarative in nature, specifying what is wanted without explicitly specifying how to compute it.

For example, here is quicksort implemented in declarative Haskell:

```haskell
quicksort :: Ord a => [a] -> [a]
quicksort [] = []
quicksort (x:xs) = quicksort smaller ++ [x] ++ quicksort larger
  where
    smaller = [a | a <- xs, a <= x]  -- "the set of elements <= x"
    larger  = [b | b <- xs, b > x]   -- "the set of elements > x"

-- We're describing properties of the result, not iteration steps
```

We are at one level above basic programs and one step towards true agents. But these automations still have very little “agency” of their own: the logic is hard-coded. This brings us to the next important ability of agents.

Context-Awareness

Where is the line drawn between an automation tool and an agent? The answer is: it depends. One thing I learnt is that “agency” is not a binary term. There are various shades of agency down the line, right from the simple programs with no agency to the future AGI that will have all of it.

Right now, let’s advance a bit along the agency axis, which is achieved by having some context-awareness. Normal programs have completely hard-coded logic and can’t do anything outside their programming. Technically speaking, neither can humans, but practically, ML programs are a lot more flexible: they often craft their own logic and update it according to the data that is given to them.
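A tiny Haskell sketch of the difference between hard-coded logic and logic derived from data might look like this. The rent figures, the fixed threshold of 1000, and all function names are made up for illustration, and taking an average is only a stand-in for what a real ML model would learn.

```haskell
-- Hypothetical sketch: the threshold, the data, and the names are made up.

-- Hard-coded logic: the threshold is fixed when the program is written.
isExpensiveFixed :: Double -> Bool
isExpensiveFixed rent = rent > 1000

-- Context-aware logic: the threshold is derived from the data the
-- program has seen, so the same code behaves differently per city.
-- With no data at all, the threshold is infinite and nothing is flagged.
learnThreshold :: [Double] -> Double
learnThreshold []    = 1 / 0   -- positive infinity
learnThreshold rents = sum rents / fromIntegral (length rents)

isExpensiveLearned :: [Double] -> Double -> Bool
isExpensiveLearned seen rent = rent > learnThreshold seen
```

Given rents of 2000, 2400, and 2200 as context, a rent of 1500 is no longer "expensive" for `isExpensiveLearned`, although the fixed rule still flags it. The code did not change; only the data did.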

The difference between a cron job and an agent is, among other things, a sense of context. The context-awareness of a program is directly proportional to its agency. In light of this, we can modify our earlier definition as follows, which I’ll now write in a point-wise format for ease of reading.

agent

a context-aware automation that can

make and update its goals based on the surrounding context

perform certain tasks as many times as needed to achieve a certain goal

bring about a change in the state of a computer system

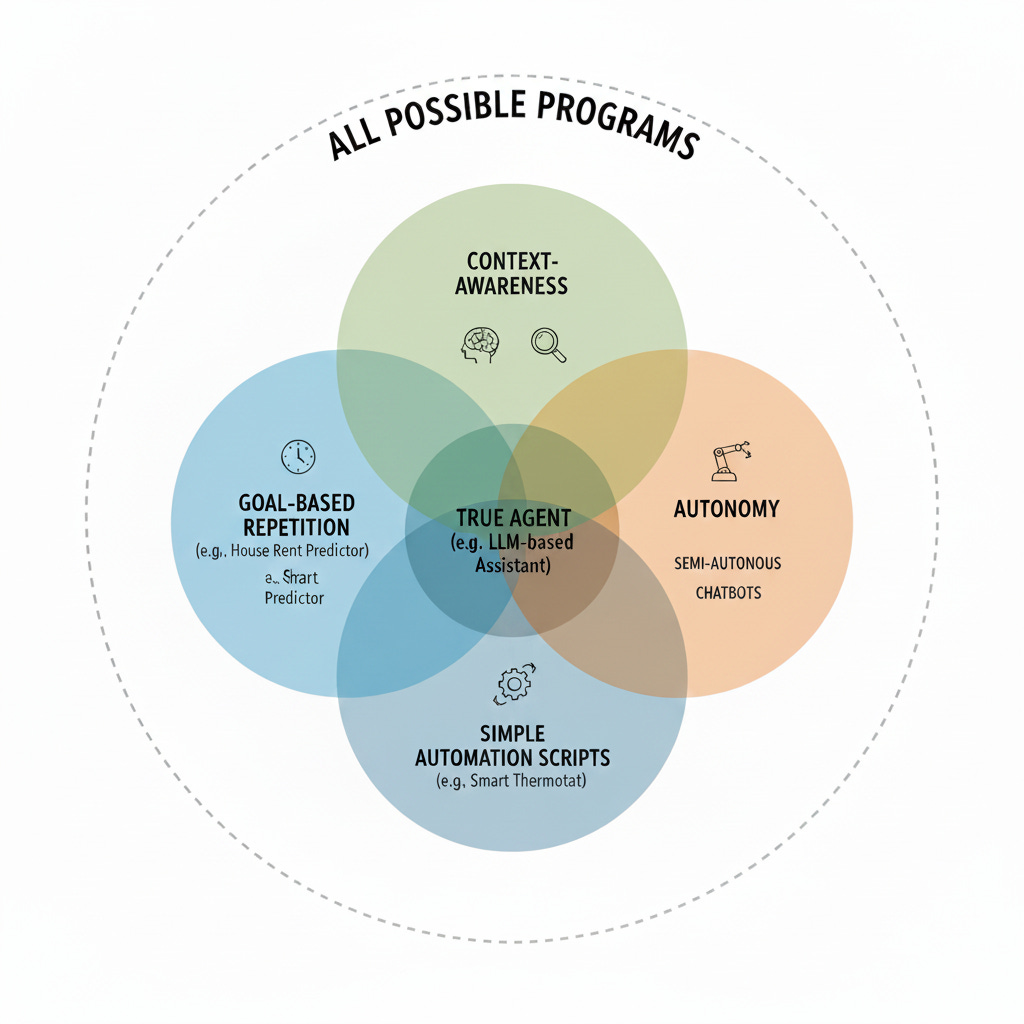

This excludes a large number of automations from the category of agents. Or, depending on how you look at it, this is just another level of classification after goal-based repetition. The way I like to look at it is to imagine a collection of all possible programs as the universal set in a Venn diagram. The different features are like circles intersecting with each other. At places where more circles intersect, agency increases.

Mostly, programs that have some sort of context-awareness are machine learning programs. They can learn from data and modify their logic as per context (and this need not be an AI). But not every ML program is an agent. The classic example of a house rent predictor is not an agent; at least, it does not feel like one. This is because what it primarily does is read and summarise, not text but numbers, to come up with an equation that predicts rent trends accurately.

What is missing is the ability to modify data. Here comes the final and, I would say, the most important aspect of an agent: autonomy. Context-awareness gives an agent flexibility; autonomy gives it initiative.

Autonomy

Imagine any kind of useful agent, like a chatbot on a train ticket booking website. You tell it the date you want to travel, the source and destination, and leave it to the agent to find the optimal train journey for you.

Imagine if at every step, the agent just read the data and gave it to you for selection:

> I want to book a train from A to B next Monday

Sure! Let me make an optimal booking...

I found these trains running next Monday. Which one should I book?

...<train schedule>...

> Um, let's go with the first one.

Sure, here is the link to book it. Can you click on this button?

[ Book ]

> <clicks button>

Here are the available seats! Which seat should I select?

> How about a window-side one?

Sure! Here is the list of window-side seats! Which seat do you want?

> ...Let's go with seat 42.

Sure, can you click on this button to select the seat?

[ Button ]

> ...You know what? I'll just book it myself, thanks.

You can see that the program is read-only. For any write operation, it has to go through the user. This has its pros: you don’t want your agent to swipe your credit card without your knowledge. But too much back-and-forth defeats the purpose of having an agent in the first place. Certain things, like the optimal train and seat selection, should be left to the agent; it should ask my permission only before paying with my card. The agent should be autonomous, or at least semi-autonomous.
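One way to picture semi-autonomy is as a rule that decides which steps need the user's sign-off. The sketch below is only an illustration based on the train-booking example: the `Action` type, `needsApproval`, `splitPlan`, and the amount 1250 are all hypothetical names and values of my own.

```haskell
import Data.List (partition)

-- Hypothetical sketch: the action names and the approval rule are
-- assumptions made for illustration.

data Action
  = SearchTrains
  | PickTrain
  | PickSeat
  | Pay Int          -- amount to charge, in the smallest currency unit
  deriving (Show, Eq)

-- Only the money-spending step needs the user's approval.
needsApproval :: Action -> Bool
needsApproval (Pay _) = True
needsApproval _       = False

-- Split a plan into the steps the agent performs on its own
-- and the steps it must confirm with the user first.
splitPlan :: [Action] -> ([Action], [Action])
splitPlan = partition (not . needsApproval)

bookingPlan :: [Action]
bookingPlan = [SearchTrains, PickTrain, PickSeat, Pay 1250]
```

Here `splitPlan bookingPlan` puts searching, picking the train, and picking the seat on the agent's side, and leaves only `Pay 1250` for the user to confirm. Making `needsApproval` return `True` for everything would recreate the frustrating chatbot from the transcript above.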

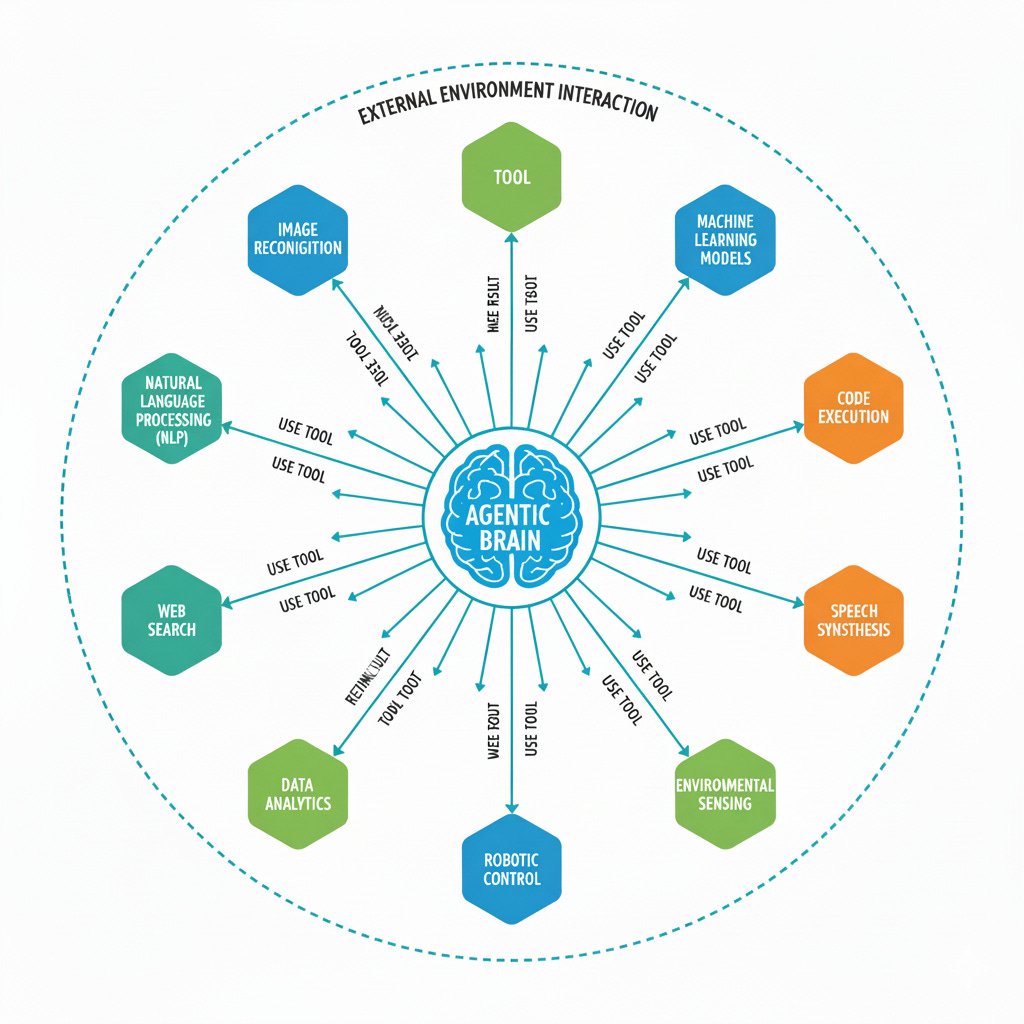

If an agent has autonomy, it can perform more tasks than just reading and summarising. It can search through data for a particular piece of information, update data, and perform a series of actions in any order before outputting the final result to the user. This gives rise to something similar to a client-server architecture, which I call the brain-tool architecture for agents.

Brain-tool Architecture

The brain-tool architecture applies to most LLM-based agents. Think of it like this: we humans interact with our environment via sense organs and limbs, which are our tools, and we process the information via a literal brain. Similarly, agents take in information through input tools, process that information in their brain, and interact back with the environment through output tools. In the course of processing information, they can invoke other tools, perhaps to search online or to modify some existing data. It feels analogous to how we operate in life.

These tools can be as simple or as complex as required. The ls command by itself is not an agent. But it can be used as a tool by an agent. At a high level, an agent itself is a tool that can be called by another agent.
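Here is a minimal Haskell sketch of what I mean by a brain and its tools. Every type and name in it (`Tool`, `Decision`, `Brain`, `runAgent`, the fake `ls` output) is an assumption of mine for illustration, and the tools are pure functions so that the example stays self-contained. Note that the brain here is a hard-coded rule, not an LLM.

```haskell
-- Hypothetical sketch: every type and name here is an illustrative assumption.

-- A tool has a name and a well-defined interface (text in, text out).
-- Real tools would do I/O; these are pure to keep the sketch self-contained.
data Tool = Tool { toolName :: String, runTool :: String -> String }

-- What the brain can decide at each step.
data Decision
  = CallTool String String   -- tool name, argument
  | Finish String            -- final answer for the user

-- The brain maps the request and the observations so far to a decision.
type Brain = String -> [String] -> Decision

-- The agent loop: ask the brain, call the chosen tool, record the result.
runAgent :: Int -> Brain -> [Tool] -> String -> String
runAgent budget brain tools request = go budget []
  where
    go n observations
      | n <= 0    = "gave up: step budget exhausted"
      | otherwise =
          case brain request observations of
            Finish answer     -> answer
            CallTool name arg ->
              case lookup name [(toolName t, t) | t <- tools] of
                Nothing   -> go (n - 1) (observations ++ ["unknown tool: " ++ name])
                Just tool -> go (n - 1) (observations ++ [runTool tool arg])

-- A stand-in for `ls`: lists a fixed, made-up directory.
lsTool :: Tool
lsTool = Tool "ls" (\_ -> "notes.txt report.pdf")

-- A rule-based brain: look at the directory once, then answer.
countFilesBrain :: Brain
countFilesBrain _ []          = CallTool "ls" "."
countFilesBrain _ (listing:_) = Finish (show (length (words listing)) ++ " files")
```

Calling `runAgent 5 countFilesBrain [lsTool] "how many files?"` makes the brain call the `ls` stand-in once and then answer from what it observed. Swapping `countFilesBrain` for something smarter would not change `runAgent` at all, which is the point of separating the brain from the tools.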

We need one more bit to finalize things. Although not required for simple, reactive agents, most useful agents have some sort of memory. It wouldn’t be very useful if you had to remind your chatbot about your past selections and preferences all the time, not to mention having to enter your card number again at the time of payment.

With this small addition, we come to the full, no-hype definition of an agent.

agent

a semi-autonomous, context-aware automation that can

make and update its goals based on the surrounding context

perform certain tasks as many times as needed to achieve a certain goal

use tools to perform its tasks and interact with the outside world

bring about a change in the state of a computer system

remember past information and use that to make present decisions

tool

a callable piece of software with a well-defined interface that performs a state-changing or information-retrieving action; a tool can itself be an agent

Since a tool can be an agent as well, this gives rise to agents calling agents, cooperating, and performing better than a single agent equipped with all the tools. This is the UNIX philosophy applied to agents: make each agent do one thing and do it well. For example, one agent might talk to you as a chatbot, trigger another agent to search for optimal trains, and hand over to yet another agent to complete the payment. All agents work together in a workflow, and nowadays this is fancifully called “agentic AI” as opposed to “multiple AI agents”.
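The "agent as a tool" idea can be sketched in a few lines of Haskell. As before, this is an illustration under my own assumptions: `Agent`, `asTool`, `call`, the three agents, and the fixed timetable entry are hypothetical names and data, and everything is pure so the example runs on its own.

```haskell
-- Hypothetical sketch: all names and data are illustrative assumptions.

data Tool = Tool { toolName :: String, runTool :: String -> String }

-- For this sketch an agent is anything that turns a request into a result,
-- using the tools it was given.
type Agent = [Tool] -> String -> String

-- Wrapping an agent (together with its own tools) makes it callable as a tool.
asTool :: String -> Agent -> [Tool] -> Tool
asTool name agent ownTools = Tool name (agent ownTools)

-- Look up a tool by name and call it; report a missing tool instead of failing.
call :: [Tool] -> String -> String -> String
call tools name arg =
  case lookup name [(toolName t, t) | t <- tools] of
    Just tool -> runTool tool arg
    Nothing   -> "no such tool: " ++ name

-- A specialist agent that does one thing: pick a train using a timetable tool.
searchAgent :: Agent
searchAgent tools route =
  "first train on " ++ route ++ ": " ++ call tools "timetable" route

-- A specialist agent that only handles payment.
paymentAgent :: Agent
paymentAgent _ booking = "paid for [" ++ booking ++ "]"

-- The front-line agent delegates to the two specialists.
chatAgent :: Agent
chatAgent tools route = call tools "pay" (call tools "search" route)

-- A plain tool with made-up data.
timetable :: Tool
timetable = Tool "timetable" (\_ -> "08:15")

specialists :: [Tool]
specialists =
  [ asTool "search" searchAgent [timetable]
  , asTool "pay" paymentAgent []
  ]
```

`chatAgent specialists "A to B"` never touches the timetable directly. It only knows that a `search` tool and a `pay` tool exist, and it cannot tell whether those are plain functions or full agents with tools of their own.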

A (Very) Brief History of Agents

People started working on agents as early as the 1950s. The first breakthrough was made by Norbert Wiener, who came up with the theory that all intelligent behaviour was the result of feedback mechanisms. He subsequently developed the field of cybernetics, which saw some of the first goal-based agents (though they were not called agents back then) that sensed their surroundings and responded autonomously.

The term “agent” itself came into vogue during the 1970s. Carl Hewitt designed the “actor model”, which laid the foundation for distributed agent systems. Most agents back then had some sort of rule-based expert system as their brain. These were nothing more than very elaborate chains of “if this then that” rules. They were mostly deterministic and could only perform in very specialized domains and conditions.

The field of agents continued to mature through the ’80s and ’90s. The first intelligent agents started coming around in the 2000s. Back then, reinforcement learning really took off as a way to train agents. In the 2010s, as deep learning boomed, agents continued to benefit from these advances, resulting in LLM-based agents that are popularly known as AI agents today.

LLMs as the Agent’s Brain

Nothing in the definition above requires an LLM. It is just that LLMs happen to be excellent brains for agents. AI agents, at their core, consist of an LLM and a set of tools. The LLM does the reasoning part efficiently and naturally, and interacts with the outside world via tools.

Seen like this, MCP (the Model Context Protocol) is to agents what HTTP is to the client-server architecture. It provides a standard protocol of communication between the LLM brain and its tools. Many other AI programs, like RAG, can be described with this model; it is very general.

Conclusion

Hopefully, I have managed to get my message across. The field of agents is a very old one, and quite independent of AI. The notion that agentic AI boomed after generative AI is flawed; AI agents are a subset of a much more general category of agents.

Let’s end this with a Socrates-like philosophical quote, which has been true for me:

The more I explore AI, the more I realize that we aren’t building something entirely new; rather, we’re rediscovering old ideas, now amplified by scale, data, and more computational power

Until later.
